76: From C to Rust on Mobile

Meta Tech Podcast •

What happens when decades-old C code, powering billions of daily messages, starts to slow down innovation? In this episode, we talk to Meta engineers Elaine and Buping, who are in the midst of a bold, incremental rewrite of one of our core messaging libraries—in Rust. Neither came into the project as Rust experts, but both saw a chance to improve not just performance, but developer experience across the board.

We dig into the technical and human sides of the project: why they took it on, how they’re approaching it without a guaranteed finish line, and what it means to optimise for something as intangible (yet vital) as developer happiness. If you’ve ever wrestled with legacy code or wondered what it takes to modernise systems at massive scale, this one’s for you.

Got feedback? Send it to us on Threads (https://threads.net/@metatechpod), Instagram (https://instagram.com/metatechpod) and don’t forget to follow our host Pascal (https://mastodon.social/@passy, https://threads.net/@passy_). Fancy working with us? Check out https://www.metacareers.com/.

Timestamps

-

Intro 0:06

-

Introduction Elaine 1:54

-

Introduction Buping 2:49

-

Team mission 3:15

-

Scale of messaging at Meta 3:40

-

State of native code on Mobile 4:40

-

Why C, not C++? 7:13

-

Challenges of working with C 10:09

-

State of Rust on Mobile 18:10

-

Why choose Rust? 23:36

-

Prior Rust experience 28:55

-

Learning Rust at Meta 34:14

-

Challenges of the migration 37:47

-

Measuring success 42:09

-

Hobbies 45:15

-

Outro 46:41

AI hot takes and debates: Autonomy

Practical AI •

Can AI-driven autonomy reduce harm, or does it risk dehumanizing decision-making? In this “AI Hot Takes & Debates” series episode, Daniel and Chris dive deep into the ethical crossroads of AI, autonomy, and military applications. They trade perspectives on ethics, precision, responsibility, and whether machines should ever be trusted with life-or-death decisions. It’s a spirited back-and-forth that tackles the big questions behind real-world AI.

Featuring:

Links:

- The Concept of "The Human" in the Critique of Autonomous Weapons

- On the Pitfalls of Technophilic Reason: A Commentary on Kevin Jon Heller’s “The Concept of ‘the Human’ in the Critique of Autonomous Weapons”

Sponsors:

- Outshift by Cisco: AGNTCY is an open source collective building the Internet of Agents. It's a collaboration layer where AI agents can communicate, discover each other, and work across frameworks. For developers, this means standardized agent discovery tools, seamless protocols for inter-agent communication, and modular components to compose and scale multi-agent workflows.

Fri. 06/27 – The Death Of The Blue Screen Of Death

Techmeme Ride Home •

Mark Zuckerberg’s big AI plan seems still to be such a work in progress, he’s even considering abandoning Llama. Apple attempts to comply with the EU’s DMA. Instagram and TikTok want to follow YouTube to your TV. The infamous Blue Screen of Death is dying. And, of course, the Weekend Longreads Suggestions.

Links:

- In Pursuit of Godlike Technology, Mark Zuckerberg Amps Up the A.I. Race (NYTimes)

- Meta says it’s winning the talent war with OpenAI (The Verge)

- Apple announces sweeping App Store changes in the EU (9to5Mac)

- Google launches Doppl, a new app that lets you visualize how an outfit might look on you (TechCrunch)

- TikTok, Instagram Plot TV Apps Following YouTube’s Success (The Information)

- Windows is getting rid of the Blue Screen of Death after 40 years (The Verge)

Weekend Longreads Suggestions:

- My Couples Retreat With 3 AI Chatbots and the Humans Who Love Them (Wired)

- AI is ruining houseplant communities online (The Verge)

See Privacy Policy at https://art19.com/privacy and California Privacy Notice at https://art19.com/privacy#do-not-sell-my-info.

Doug, Dylan and Jon on Lip-Bu, Labubu, AI Salaries, and Bees

ChinaTalk •

Inside Cerebral Valley: Autonomous Vehicles & AI Investment

The Cerebral Valley Podcast •

Today on the pod, we're bringing you two of the liveliest panels from the 2025 Cerebral Valley AI Summit, held this week in London.

Both panels — “The Autonomous Vehicle Rollout” and “Investing in 2030” — explore one of the major themes from the event: where AI is poised to show up next in our everyday lives, beyond the chatbot. Think voice, devices, and even your car.

First up, we'll hear from Uber CEO, Dara Khosrowshahi, and Alex Kendall, Co-founder and CEO of Wayve, who are teaming up to bring self-driving cars to the UK.

Then we turn to the investor perspective, with top European VCs — Philippe Botteri of Accel, Tom Hulme of Google Ventures, and Jan Hammer of Index Ventures — on where they see the biggest AI opportunities for founders in the years ahead.

AI’s Unsung Hero: Data Labeling and Expert Evals

AI + a16z •

Labelbox CEO Manu Sharma joins a16z Infra partner Matt Bornstein to explore the evolution of data labeling and evaluation in AI — from early supervised learning to today’s sophisticated reinforcement learning loops.

Manu recounts Labelbox’s origins in computer vision, and then how the shift to foundation models and generative AI changed the game. The value moved from pre-training to post-training and, today, models are trained not just to answer questions, but to assess the quality of their own responses. Labelbox has responded by building a global network of “aligners” — top professionals from fields like coding, healthcare, and customer service, who label and evaluate data used to fine-tune AI systems.

The conversation also touches on Meta’s acquisition of Scale AI, underscoring how critical data and talent have become in the AGI race.

Here's a sample of Manu explaining how Labelbox was able to transition from one era of AI to another:

It took us some time to really understand like that the world is shifting from building AI models to renting AI intelligence. A vast number of enterprises around the world are no longer building their own models; they're actually renting base intelligence and adding on top of it to make that work for their company. And that was a very big shift.

But then the even bigger opportunity was the hyperscalers and the AI labs that are spending billions of dollars of capital developing these models and data sets. We really ought to go and figure out and innovate for them. For us, it was a big shift from the DNA perspective because Labelbox was built with a hardcore software-tools mindset. Our go-to market, engineering, and product and design teams operated like software companies.

But I think the hardest part for many of us, at that time, was to just make the decision that we're going just go try it and do it. And nothing is better than that: "Let's just go build an MVP and see what happens."

Follow everyone on X:

Check out everything a16z is doing with artificial intelligence here, including articles, projects, and more podcasts.

Legendary Consumer VC Predicts The Future Of AI Products

Y Combinator Startup Podcast •

Kirsten Green, founder of Forerunner Ventures, has backed some of the most iconic consumer brands of the past two decades — from Warby Parker to Chime to Dollar Shave Club.

In this conversation with Garry, she shares how great products (not marketing tricks) still win, why AI is unlocking a new kind of emotional relationship between consumers and technology, and what founders can learn from the messy creative stage we're in right now. She also breaks down how shifts in distribution, wellness, and digital behavior are reshaping what it means to build for real human needs.

Henrique Malvar - Episode 71

ACM ByteCast •

In this episode of ACM ByteCast, Rashmi Mohan hosts Henrique Malvar, a signal processing researcher at Microsoft Research (Emeritus). He spent more than 25 years at Microsoft as a distinguished engineer and chief scientist, leading the Redmond, Washington lab (managing more than 350 researchers). At Microsoft, he contributed to the development of audio coding and digital rights management for the Windows Media Audio, Windows Media Video, and to image compression technologies, such as HD Photo/JPEG XR formats and the RemoteFX bitmap compression, as well as to a variety of tools for signal analysis and synthesis. Henrique is also an Affiliate Professor at the Electrical and Computer Engineering Department at the University of Washington and a member of the National Academy of Engineers. He has published over 180 articles, has been issued over 120 patents, and has been the recipient for countless awards for his service. Henrique explains his early love of electrical engineering, building circuits from an early age growing up in Brazil, and later fulfilling his dream of researching digital signal processing at MIT. He describes his work as Vice President for Research and Advanced Technology at PictureTel, one of the first commercial videoconferencing product companies (later acquired by Polycom) and stresses the importance of working with customers to solve a variety of technical challenges. Henrique also shares his journey at Microsoft, including working on videoconferencing, accessibility, and machine learning products. He also offers advice to aspiring researchers and emphasizes the importance of diversity to research and product teams.

Episode 51: Why We Built an MCP Server and What Broke First

Vanishing Gradients •

How We Built Our AI Email Assistant: A Behind-the-Scenes Look at Cora

AI & I •

You don’t need to handle your inbox anymore. It’s Cora’s job now.

Cora is the AI chief of staff we built for your email at Every. It’s been in private beta for the last 6 months and currently manages email for 2,500 beta users—and today we’re making it available for anyone to use. Start your free 7-day trial by going to: https://cora.computer/

Cora is the $150K executive assistant that costs $15/month. Or $20/month if you want an Every subscription, too. This is what that actually means:

- Cora understands what’s important to you, screens your inbox, and only lets the most relevant emails through.

- The rest of your emails are summarized in a beautifully designed brief that’s sent to you twice a day.

- If it has enough context, Cora drafts replies for you in your voice.

- You can talk to Cora like you would your chief of staff—you can give it special instructions on how you want certain emails handled, ask it to summarize things, and even give you an opinion on complex decisions.

In this episode of AI & I, I sat down with the team behind Cora—Brandon Gell, head of the product studio; Kieran Klaassen, Cora’s general manager; and Nityesh Agarwal, engineer at Cora—for a closer look at how it all came together. We talk about:

- The story of the first time Brandon, Kieran, and I used Cora, while sipping wine at the Every retreat in Nice.

- The evolution of Cora’s categorization system, from a 4-hour vibe-coded prototype to a multi-faceted product with thousands of happy users.

- The features on Cora’s roadmap we’re most excited about: a unified brief across different email accounts, an iOS app, and an even more powerful assistant.

This is a must-watch if you’re curious about what it feels like to give Cora your inbox, and take back your life. Go to https://cora.computer/ to start your 7-day free trial now.

If you found this episode interesting, please like, subscribe, comment, and share!

Want even more?

Sign up for Every to unlock our ultimate guide to prompting ChatGPT here: https://every.ck.page/ultimate-guide-to-prompting-chatgpt. It’s usually only for paying subscribers, but you can get it here for free.

Sponsor: Experience high quality AI video generation with Google's most capable video model: Veo 3. Try it in the Gemini app at gemini.google with a Google AI Pro plan or get the highest access with the Ultra plan.

To hear more from Dan Shipper:

- Subscribe to Every: https://every.to/subscribe

- Follow him on X: https://twitter.com/danshipper

Timestamps:

- Introduction: 00:01:40

- Three ways Cora transforms your inbox (and your day): 00:04:21

- A live walkthrough of Cora’s features: 00:05:09

- The inside story of the first time Kieran, Brandon, and Dan used Cora: 00:12:13

- Train Cora like you would a trusted chief of staff: 00:16:30

- The AI tools that blew our minds while building Cora: 00:27:25

- How we build workflows that compound with AI at Every: 00:30:34

- The dream features that we’d like to put on Cora’s roadmap: 00:42:36

Links to resources mentioned in the episode:

- Try Cora now with a 7-day free trial: cora.computer

- The episode about how Kieran and Nityesh use Claude Code to build Cora: "How Two Engineers Ship Like a Team of 15 With AI Agents"

Claude Is Learning to Build Itself - Anthropic’s Michael Gerstenhaber on Agentic AI

Superhuman AI: Decoding the Future •

AI is evolving faster than anyone expected and we may already be seeing the early signs of superintelligence.

In this episode of the Superhuman AI Podcast, we sit down with Michael Gerstenhaber, Head of Product at Anthropic (makers of Claude AI), to explore:

- How AI models have transformed in just one year

- Why coding is the ultimate benchmark for AI progress

- How Claude is learning to code through agentic loops

- What "post-language model" intelligence might look like

- Whether superintelligence is already here — and we’ve just missed it

From autonomous coding to the rise of AI agents, this episode offers a glimpse into the next phase of artificial intelligence and how it could change everything.

Subscribe for deep conversations on AI, agents, and the future of intelligence.

Learn more about the Google for Startups Cloud Program here:

https://cloud.google.com/startup/apply?utm_source=cloud_sfdc&utm_medium=et&utm_campaign=FY21-Q1-global-demandgen-website-cs-startup_program_mc&utm_content=superhuman_dec&utm_term=-

Better Value Sooner Safer Happier • Simon Rohrer & Eduardo da Silva

GOTO - The Brightest Minds in Tech •

This interview was recorded for the GOTO Book Club.

http://gotopia.tech/bookclub

Read the full transcription of the interview here

Simon Rohrer - Co-Author of "Better Value Sooner Safer Happier" & Senior Director at Saxo Bank

Eduardo da Sliva - Independent Consultant on Organization, Architecture, and Leadership Modernization

RESOURCES

Simon

https://bsky.app/profile/simon.bvssh.com

https://mastodon.social/@simonr

https://x.com/sirohrer

https://www.linkedin.com/in/simonrohrer

https://github.com/sirohrer

https://www.soonersaferhappier.com

Eduardo

https://bsky.app/profile/esilva.net

https://mastodon.social/@eduardodasilva

https://x.com/emgsilva

https://www.linkedin.com/in/emgsilva

https://github.com/emgsilva

https://esilva.net

DESCRIPTION

Eduardo da Silva and Simon Rohrer discuss the core ideas of "Better Value Sooner Safer Happier" diving into the principles of organizational transformation.

Simon shares insights on the shift from output-driven to outcome-focused thinking, emphasizing value over productivity, and the need for continuous improvement in delivery speed, stakeholder satisfaction, and safety.

The conversation explores key concepts like technical excellence, integrating safety into development, and balancing incremental changes with occasional larger steps.

Simon Rohrer discusses organizational patterns and the importance of decentralizing decision-making, recommending a flexible, context-driven approach to transformation. The session concludes with practical advice on how to start implementing these ideas, using the book’s map to guide organizations toward the right transformation strategy based on their specific goals.

RECOMMENDED BOOKS

Jonathan Smart, Zsolt Berend, Myles Ogilvie & Simon Rohrer • Sooner Safer Happier

Stephen Fishman & Matt McLarty • Unbundling the Enterprise

Carliss Y. Baldwin • Design Rules, Vol. 2

Matthew Skelton & Manuel Pais • Team Topologies

Forsgren, Humble & Kim • Accelerate: The Science of Lean Software and DevOps

Kim, Humble, Debois, Willis & Forsgren • The DevOps Handbook

Bluesky

Twitter

Instagram

LinkedIn

Facebook

CHANNEL MEMBERSHIP BONUS

Join this channel to get early access to videos & other perks:

https://www.youtube.com/channel/UCs_tLP3AiwYKwdUHpltJPuA/join

Looking for a unique learning experience?

Attend the next GOTO conference near you! Get your ticket: gotopia.tech

SUBSCRIBE TO OUR YOUTUBE CHANNEL - new videos posted daily!

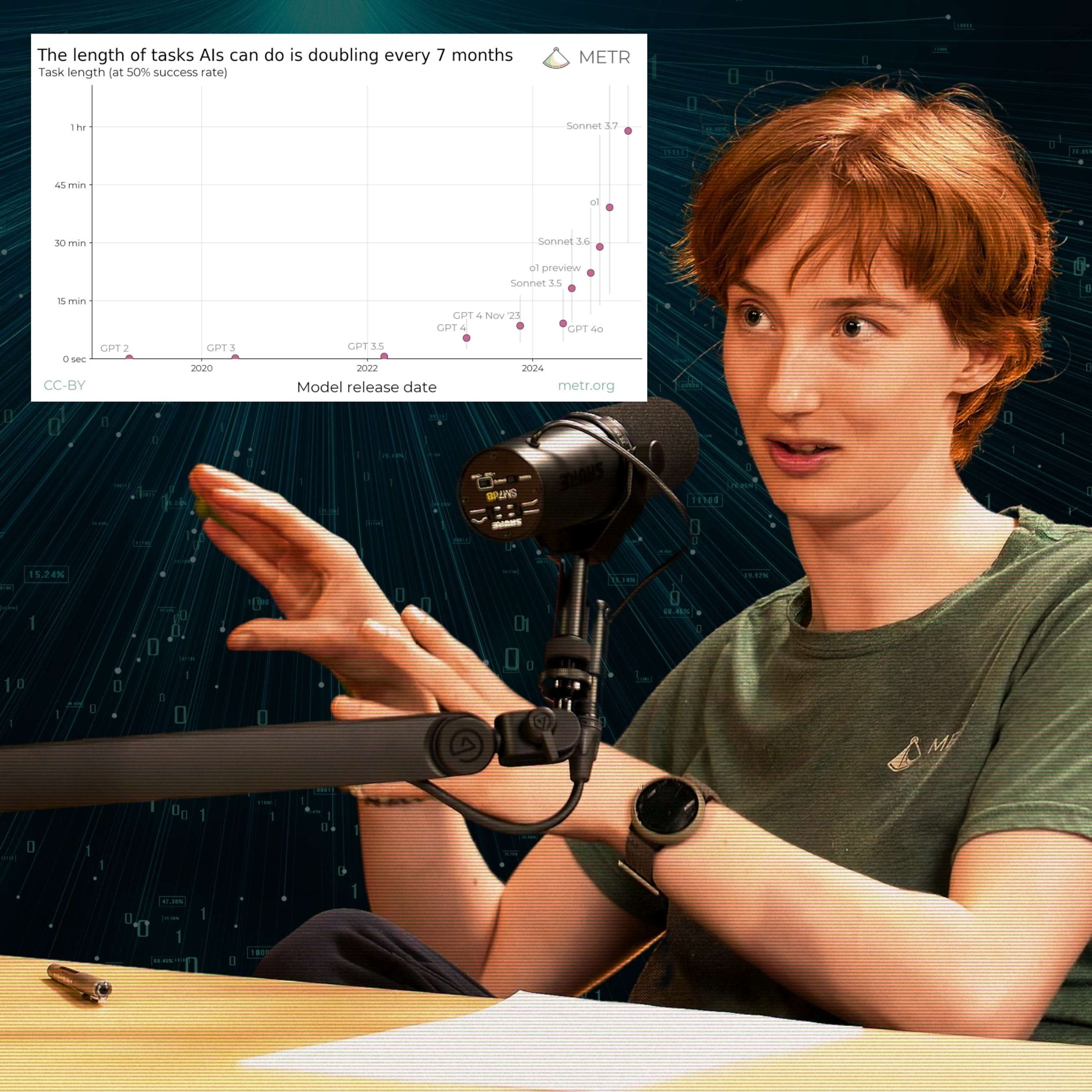

Highlights: #217 – Beth Barnes on the most important graph in AI right now — and the 7-month rule that governs its progress

80k After Hours •

AI models today have a 50% chance of successfully completing a task that would take an expert human one hour. Seven months ago, that number was roughly 30 minutes — and seven months before that, 15 minutes.

These are substantial, multi-step tasks requiring sustained focus: building web applications, conducting machine learning research, or solving complex programming challenges.

Today’s guest, Beth Barnes, is CEO of METR (Model Evaluation & Threat Research) — the leading organisation measuring these capabilities.

These highlights are from episode #217 of The 80,000 Hours Podcast: Beth Barnes on the most important graph in AI right now — and the 7-month rule that governs its progress, and include:

- Can we see AI scheming in the chain of thought? (00:00:34)

- We have to test model honesty even before they're used inside AI companies (00:05:48)

- It's essential to thoroughly test relevant real-world tasks (00:10:13)

- Recursively self-improving AI might even be here in two years — which is alarming (00:16:09)

- Do we need external auditors doing AI safety tests, not just the companies themselves? (00:21:55)

- A case against safety-focused people working at frontier AI companies (00:29:30)

- Open-weighting models is often good, and Beth has changed her attitude about it (00:34:57)

These aren't necessarily the most important or even most entertaining parts of the interview — so if you enjoy this, we strongly recommend checking out the full episode!

And if you're finding these highlights episodes valuable, please let us know by emailing podcast@80000hours.org.

Highlights put together by Ben Cordell, Milo McGuire, and Dominic Armstrong

Humanizing Your AI Communications Strategy with Edelman Global Chair of AI Brian Buchwald

Shift AI Podcast •

In this episode of the Shift AI Podcast, Boaz Ashkenazy welcomes Brian Buchwald, who leads AI strategy and product development at Edelman, the world's largest communications firm. With his impressive background spanning investment banking, ad tech, digital media, and entrepreneurship, Buchwald offers a unique perspective on how AI is transforming both internal operations and client services at global scale.

Discover how Edelman is implementing AI across its 7,000-employee organization and for its prestigious client roster. Buchwald shares fascinating insights on the balance between automation and human expertise, the evolution of trust in the AI era, and how communications professionals are quantifying their impact. Whether you're interested in AI implementation at enterprise scale or the future of knowledge work, this episode reveals how "curated automation" is redefining an entire industry while keeping the human element at its core.

Chapters:

[00:00] Introduction to Brian Buchwald and Edelman

[03:09] Brian's Career Journey from Ad Tech to Communications

[05:35] Four Lenses of AI Implementation at Edelman

[06:59] Trust Quantification and Research Transformation

[10:17] Trust and AI: The Public Perception

[13:01] Three-Tiered Approach to Client Transformation

[17:55] Measuring Business Value in Communications

[21:48] The Human-Machine Partnership in Creative Work

[24:11] Mentors and Strategic Influences

[29:11] The Future of Work: Curated Automation

Connect with Brian Buchwald

LinkedIn: https://www.linkedin.com/in/brian-buchwald-0447591/

Email: brian.buchwald@edelman.com

Connect with Boaz Ashkenazy

LinkedIn: https://www.linkedin.com/in/boazashkenazy

Email: shift@augmentedailabs.com

The Shift AI Podcast is syndicated by GeekWire, and we are grateful to have the show sponsored by Augmented AI Labs. Our theme music was created by Dave Angel

Follow, Listen, and Subscribe

Spotify | Apple Podcast | Youtube

You’ve got 99 problems but data shouldn’t be one

The Stack Overflow Podcast •

Tobiko Data is creating a new standard in data transformation with their Cloud and SQL integrations. You can keep up with their work by joining their Slack community.

Connect with Toby on LinkedIn.

Connect with Iaroslav on LinkedIn.

Congrats to Stellar Answer badge winner Christian C. Salvadó, whose answer to What's a quick way to comment/uncomment lines in Vim? was saved by over 100 users.

Unlocking Enterprise Efficiency Through AI Orchestration - Kevin Kiley of Airia

The AI in Business Podcast •

Today’s guest is Kevin Kiley, President of Airia. With extensive experience helping large enterprises implement secure and scalable AI systems, Kevin joins Emerj Editorial Director Matthew DeMello to explore how agentic AI is reshaping enterprise workflows across industries like financial services. He explains how these systems differ from traditional AI by enabling autonomous action across connected environments—introducing both new efficiencies and new risks. Kevin breaks down a phased roadmap for adoption, from quick wins to broader orchestration, and shares key lessons from working with organizations navigating complex compliance, data governance, and access control challenges. He also highlights the growing importance of real-time safeguards and defensive security strategies as AI capabilities — and threats — continue to evolve. This episode is sponsored by Airia. Want to share your AI adoption story with executive peers? Click emerj.com/expert2 for more information and to be a potential future guest on the ‘AI in Business’ podcast!

Windows killed the Blue Screen of Death

TechCrunch Daily Crunch •

Bill Gates-backed AirLoom begins building its first power plant

TechCrunch Industry News •

Managing Deployments and DevOps at Scale with Tom Elliott

The Scaling Tech Podcast •

Thu. 06/26 – Conflicting AI Legal Rulings

Techmeme Ride Home •

The legal rulings on AI are finally coming in. The problem is, they’re contradictory, so we’re not getting any legal clarity yet. Creative Commons but for AI training data. Is DeepSeek’s R2 model being stymied by lack of access to Nvidia chips? And another deep look at the question of: is AI taking jobs at tech companies, right now?

Links:

- Microsoft sued by authors over use of books in AI training (Reuters)

- Trump Mobile reiterates claims that new phones are 'made in America' (USAToday)

- Creative Commons debuts CC signals, a framework for an open AI ecosystem (TechCrunch)

- OpenAI, Microsoft Rift Hinges on How Smart AI Can Get (WSJ)

- DeepSeek’s Progress Stalled by U.S. Export Controls (The Information)

- Salesforce CEO Says 30% of Internal Work Is Being Handled by AI (Bloomberg)

- AI Killed My Job: Tech workers (Blood In The Machine)

See Privacy Policy at https://art19.com/privacy and California Privacy Notice at https://art19.com/privacy#do-not-sell-my-info.

A Big Ruling on LLM Training and Midsummer Mail on NBA Salaries in Tech, Starting from Scratch in 2025, and More

Sharp Tech with Ben Thompson •

Optimizing Data Workflows with Emily Riederer | Season 6 Episode 8

Casual Inference •

- Emily: @emilyriederer.bsky.social

- Ellie: @epiellie.bsky.social

- Lucy: @lucystats.bsky.social

Bucky Moore: The Next Decade of AI Infrastructure

Generative Now | AI Builders on Creating the Future •

This week, Lightspeed Partner Mike Mignano sits down with his colleague Bucky Moore, a fellow partner at Lightspeed to explore the rapidly shifting landscape of AI and infrastructure. They unpack the evolution from hardware to cloud to AI-native architectures, the growing momentum behind open-source models, and the rise of AI agents and reinforcement learning environments. Bucky also shares how his early days at Cisco shaped his bottom-up view of enterprise software, and why embracing the new is the key to spotting trillion-dollar opportunities.

Episode Chapters:

(00:00) Introduction to the Conversation

(00:38) Bucky Moore's Background and Early Career

(01:39) Insights from Cisco: Old World Meets New World

(03:54) Transition to Venture Capital

(08:15) The Evolution of Infrastructure Investment

(15:50) Impact of AI on Infrastructure

(24:37) Training AI Agents: Challenges and Innovations

(25:07) The Future of Reinforcement Learning Environments

(25:51) Infrastructure for AI Agents

(26:49) Emerging Opportunities in AI Infrastructure

(28:58) The Impact of Salesforce's Data Policies

(33:00) The Evolution of AI Compute

(39:47) The Role of New AI Architectures

(42:47) The Future of Venture Capital in AI

(46:44) Predicting the Next Trillion-Dollar AI Companies

Stay in touch:

LinkedIn: https://www.linkedin.com/company/lightspeed-venture-partners/

Instagram: https://www.instagram.com/lightspeedventurepartners/

Subscribe on your favorite podcast app: generativenow.co

Email: generativenow@lsvp.com

The content here does not constitute tax, legal, business or investment advice or an offer to provide such advice, should not be construed as advocating the purchase or sale of any security or investment or a recommendation of any company, and is not an offer, or solicitation of an offer, for the purchase or sale of any security or investment product. For more details please see lsvp.com/legal.

The AI infrastructure stack with Jennifer Li, a16z

Complex Systems with Patrick McKenzie (patio11) •

In this episode, Patrick McKenzie (@patio11) is joined by Jennifer Li, a general partner at a16z investing in enterprise, infrastructure and AI. Jennifer breaks down how AI workloads are creating new demands on everything from inference pipelines to observability systems, explaining why we're seeing a bifurcation between language models and diffusion models at the infrastructure level. They explore emerging categories like reinforcement learning environments that help train agents, the evolution of web scraping for agentic workflows, and why Jennifer believes the API economy is about to experience another boom as agents become the primary consumers of software interfaces.

–

Full transcript:

www.complexsystemspodcast.com/the-ai-infrastructure-stack-with-jennifer-li-a16z/

–

Sponsor: Vanta

Vanta automates security compliance and builds trust, helping companies streamline ISO, SOC 2, and AI framework certifications. Learn more at https://vanta.com/complex

–

Links:

- Jennifer Li’s writing at a16z https://a16z.com/author/jennifer-li/

–

Timestamps:

(00:00) Intro

(00:55) The AI shift and infrastructure

(02:24) Diving into middleware and AI models

(04:23) Challenges in AI infrastructure

(07:07) Real-world applications and optimizations

(15:15) Sponsor: Vanta

(16:38) Real-world applications and optimizations (cont’d)

(19:05) Reinforcement learning and synthetic environments

(23:05) The future of SaaS and AI integration

(26:02) Observability and self-healing systems

(32:49) Web scraping and automation

(37:29) API economy and agent interactions

(44:47) Wrap

Satya Nadella: Microsoft’s AI Bets, Hyperscaling, Quantum Computing Breakthroughs

Y Combinator Startup Podcast •

A fireside with Satya Nadella on June 17, 2025 at AI Startup School in San Francisco.Satya Nadella started at Microsoft in 1992 as an engineer. Three decades later, he’s now Chairman & CEO, navigating the company through one of the most profound technological shifts yet: the rise of AI.In this conversation, he shares how Microsoft is thinking about this moment— from the infrastructure needed to train frontier models, to the social permission required to use that compute. He draws parallels to the early PC and internet eras, breaks down what makes a great team, and reflects on what he’d build if he were starting his career today.

#213 - Midjourney video, Gemini 2.5 Flash-Lite, LiveCodeBench Pro

Last Week in AI •

Our 213nd episode with a summary and discussion of last week's big AI news! Recorded on 06/21/2025

Hosted by Andrey Kurenkov and Jeremie Harris. Feel free to email us your questions and feedback at contact@lastweekinai.com and/or hello@gladstone.ai

Read out our text newsletter and comment on the podcast at https://lastweekin.ai/.

In this episode:

- Midjourney launches its first AI video generation model, moving from text-to-image to video with a subscription model offering up to 21-second clips, highlighting the affordability and growing capabilities in AI video generation.

- Google's Gemini AI family updates include high-efficiency models for cost-effective workloads, and new enhancements in Google's search function now allow for voice interactions.

- The introduction of two new benchmarks, Live Code Bench Pro and Abstention Bench, aiming to test and improve the problem-solving and abstention capabilities of reasoning models, revealing current limitations.

- OpenAI wins a $200 million US defense contract to support various aspects of the Department of Defense, reflecting growing collaborations between tech companies and government for AI applications.

Timestamps + Links:

- (00:00:10) Intro / Banter

- (00:01:32) News Preview

- Tools & Apps

- (00:02:12) Midjourney launches its first AI video generation model, V1

- (00:05:52) Google’s Gemini AI family updated with stable 2.5 Pro, super-efficient 2.5 Flash-Lite

- (00:07:59) Google’s AI Mode can now have back-and-forth voice conversations

- (00:10:13) YouTube to Add Google’s Veo 3 to Shorts in Move That Could Turbocharge AI on the Video Platform

- Applications & Business

- (00:11:10) The ‘OpenAI Files’ will help you understand how Sam Altman’s company works

- (00:12:29) OpenAI drops Scale AI as a data provider following Meta deal

- (00:13:28) Amazon’s Zoox opens its first major robotaxi production facility

- Projects & Open Source

- (00:15:20) LiveCodeBench Pro: How Do Olympiad Medalists Judge LLMs in Competitive Programming?

- (00:19:45) AbstentionBench: Reasoning LLMs Fail on Unanswerable Questions

- (00:22:49) MiniMax-M1: Scaling Test-Time Compute Efficiently with Lightning Attention

- Research & Advancements

- Policy & Safety

See Why GenAI Workloads Are Breaking Observability with Wayne Segar

Screaming in the Cloud •

What happens when you try to monitor something fundamentally unpredictable? In this featured guest episode, Wayne Segar from Dynatrace joins Corey Quinn to tackle the messy reality of observing AI workloads in enterprise environments. They explore why traditional monitoring breaks down with non-deterministic AI systems, how AI Centers of Excellence are helping overcome compliance roadblocks, and why “human in the loop” beats full automation in most real-world scenarios.

From Cursor’s AI-driven customer service fail to why enterprises are consolidating from 15+ observability vendors, this conversation dives into the gap between AI hype and operational reality, and why the companies not shouting the loudest about AI might be the ones actually using it best.

Show Highlights

(00:00) - Cold Open

(00:48) – Introductions and what Dynatrace actually does

(03:28) – Who Dynatrace serves

(04:55) – Why AI isn't prominently featured on Dynatrace's homepage

(05:41) – How Dynatrace built AI into its platform 10 years ago

(07:32) – Observability for GenAI workloads and their complexity

(08:00) – Why AI workloads are "non-deterministic" and what that means for monitoring

(12:00) – When AI goes wrong

(13:35) – “Human in the loop”: Why the smartest companies keep people in control

(16:00) – How AI Centers of Excellence are solving the compliance bottleneck

(18:00) – Are enterprises too paranoid about their data?

(21:00) – Why startups can innovate faster than enterprises

(26:00) – The "multi-function printer problem" plaguing observability platforms

(29:00) – Why you rarely hear customers complain about Dynatrace

(31:28) – Free trials and playground environments

About Wayne Segar

Wayne Segar is Director of Global Field CTOs at Dynatrace and part of the Global Center of Excellence where he focuses on cutting-edge cloud technologies and enabling the adoption of Dynatrace at large enterprise customers. Prior to joining Dynatrace, Wayne was a Dynatrace customer where he was responsible for performance and customer experience at a large financial institution.

Links

Dynatrace website: https://dynatrace.com

Dynatrace free trial: https://dynatrace.com/trial

Dynatrace AI observability: https://dynatrace.com/platform/artificial-intelligence/

Wayne Segar on LinkedIn: https://www.linkedin.com/in/wayne-segar/

Sponsor

Dynatrace: http://www.dynatrace.com

Building Production-Grade RAG at Scale

The Data Exchange with Ben Lorica •

Douwe Kiela, Founder and CEO of Contextual AI, discusses why RAG isn’t obsolete despite massive context windows, explaining how RAG 2.0 represents a fundamental shift to treating retrieval-augmented generation as an end-to-end trainable system.

Subscribe to the Gradient Flow Newsletter 📩 https://gradientflow.substack.com/

Subscribe: Apple · Spotify · Overcast · Pocket Casts · AntennaPod · Podcast Addict · Amazon · RSS.

Detailed show notes - with links to many references - can be found on The Data Exchange web site.

Novoloop is making tons of upcycled plastic

TechCrunch Industry News •

Google unveils Gemini CLI

TechCrunch Daily Crunch •

Databricks, Perplexity co-founder pledges $100M on new fund for AI researchers

TechCrunch Startup News •

How a data processing problem at Lyft became the basis for Eventual

TechCrunch Industry News •

#511: From Notebooks to Production Data Science Systems

Talk Python To Me •

Episode sponsors

Agntcy

Sentry Error Monitoring, Code TALKPYTHON

Talk Python Courses

Links from the show

Catherine Nelson LinkedIn Profile: linkedin.com

Catherine Nelson Bluesky Profile: bsky.app

Enter to win the book: forms.google.com

Going From Notebooks to Scalable Systems - PyCon US 2025: us.pycon.org

Going From Notebooks to Scalable Systems - Catherine Nelson – YouTube: youtube.com

From Notebooks to Scalable Systems Code Repository: github.com

Building Machine Learning Pipelines Book: oreilly.com

Software Engineering for Data Scientists Book: oreilly.com

Jupytext - Jupyter Notebooks as Markdown Documents: github.com

Jupyter nbconvert - Notebook Conversion Tool: github.com

Awesome MLOps - Curated List: github.com

Watch this episode on YouTube: youtube.com

Episode #511 deep-dive: talkpython.fm/511

Episode transcripts: talkpython.fm

--- Stay in touch with us ---

Subscribe to Talk Python on YouTube: youtube.com

Talk Python on Bluesky: @talkpython.fm at bsky.app

Talk Python on Mastodon: talkpython

Michael on Bluesky: @mkennedy.codes at bsky.app

Michael on Mastodon: mkennedy

Episode 46: Software Composition Is the New Vibe Coding

Vanishing Gradients •

D2DO276: MCP: Capable, Insecure, and On Your Network Today

Day Two DevOps •

Wed. 06/25 – Never Wear A Suit In Tech

Techmeme Ride Home •

AI is transforming job search on both sides of the equation. A first court ruling on using copyrighted books to train AI. New AI releases from Google devs will want to know about. How your kids 3rd grade teacher is using AI. And why did Apple push an ad to everybody?

Sponsors:

Links:

- Employers Are Buried in A.I.-Generated Résumés (NYTimes)

- CareerBuilder + Monster to Sell Businesses in Bankruptcy (WSJ)

- Exclusive: Uber and Palantir alums raise $35M to disrupt corporate recruitment with AI (Fortune)

- Anthropic wins a major fair use victory for AI — but it’s still in trouble for stealing books (The Verge)

- Google unveils Gemini CLI, an open-source AI tool for terminals (TechCrunch)

- How ChatGPT and other AI tools are changing the teaching profession (AP)

- iPhone customers upset by Apple Wallet ad pushing ‘F1’ movie (TechCrunch)

See Privacy Policy at https://art19.com/privacy and California Privacy Notice at https://art19.com/privacy#do-not-sell-my-info.

859: BAML: The Programming Language for AI, with Vaibhav Gupta

Super Data Science: ML & AI Podcast with Jon Krohn •

RAG Benchmarks with Nandan Thakur - Weaviate Podcast #124!

Weaviate Podcast •

Nandan Thakur is a Ph.D. student at the University of Waterloo! Nandan has worked on many of the most impactful works in Retrieval-Augmented Generation (RAG) and Information Retrieval. His work ranges from benchmarks such as BEIR, MIRACLE, TREC, and FreshStack, to improving the training of embedding models and re-rankings, and more!

(Preview) The PRC and the Past Two Weeks in Iran; Ten Speeches in Taiwan; London Framework Faltering?; All Eyes on the EU

Sharp China with Bill Bishop •

Episode 42: Learning, Teaching, and Building in the Age of AI

Vanishing Gradients •

Chinar Movsisyan: How to Deliver End-to-End AI Solutions

ConTejas Code •

Links

- Codecrafters (sponsor): https://tej.as/codecrafters

- Feedback Intelligence: https://www.feedbackintelligence.ai/

- Chinar on X: https://x.com/movsisyanchinar

Summary

In this podcast episode, we talk to Chinar Movsisyan, the CEO and founder of Feedback Intelligence. They discuss Chinar's extensive background in AI, including her experience in machine learning and computer vision. We discuss the challenges faced in bridging the gap between technical and non-technical stakeholders, the practical applications of feedback intelligence in enhancing user experience, and the importance of identifying failure modes. The discussion also covers the role of LLMs in the architecture of Feedback Intelligence, the company's current stage, and how it aims to make feedback actionable for businesses.

Chapters

00:00 Chinar Movsisyan

02:08 Introduction to Feedback Intelligence

03:23 Chinar Movsisyan's Background and Expertise

06:33 Understanding AI Engineer vs. GenAI Engineer

09:08 The Lifecycle of Building an AI Application

13:27 Data Collection and Cleaning Challenges

16:20 Training the AI Model: Process and Techniques

24:48 Deploying and Monitoring AI Models in Production

27:55 The Birth of Feedback Intelligence

31:58 Understanding Feedback Intelligence

33:26 Practical Applications of Feedback Intelligence

42:13 Identifying Failure Modes

45:58 The Role of LLMs in Feedback Intelligence

51:25 Company Stage and Future Directions

57:24 Making Feedback Actionable

01:01:30 Streamlining Processes with Automation

01:03:18 The Journey of a First-Time Founder

01:05:48 Wearing Many Hats: The Founder Experience

01:08:22 Prioritizing Features in Early Startups

01:13:09 Learning from Customer Interactions

01:16:38 The Importance of Problem-Solving

01:21:51 Handling Rejection and Staying Motivated

01:27:43 Marketing Challenges for Founders

01:29:23 Future Plans and Scaling Strategies

Hosted on Acast. See acast.com/privacy for more information.

Codename Goose - Your Next Open Source AI Agent

The Square Developer Podcast •

Richard Moot: Hello and welcome to another episode of the Square Developer Podcast. I'm your host, Richard Moot, head of developer relations here at Square. And today I'm joined by my fellow developer relations engineer, Rizel, who's over working on Block Open Source. Hi Rizel, welcome to the podcast.

Rizel Scarlett: Hey, Richard. Thanks for having me. And I know it's so cool. We're like coworkers, but on different teams

Richard Moot: And you get to work on some of the, I'll admit I'm a little bit jealous. You get to work on some of the cool open source stuff, but I still get to poke around in there occasionally. But today we wanted to talk about one of our most recent releases is Goose, and I would like you to do the honors of, give us the quick pitch. What is Goose?

Rizel Scarlett: Goose is an on machine AI agent and it's open source. So when I say on machine, it's local. Unlike a lot of other AI tools that you use via the cloud, you have everything stored on your computer, private, you have control over the data, and you get to interact with different lms. You can choose whichever you want, whether it's GPT, sonnet, 3.5, whatever you prefer, you get to bring it.

Richard Moot: Awesome. And so I'm going to hopefully give a little bit more because I want to just kind of clarify for Square developers who might be coming in, they're like, they're just building other APIs, SDKs, trying to extend stuff for square sellers. So when we're talking about an agent, an agent, I always end up thinking the matrix, the agents and the matrix. And from what I understand, it's not too far off. You give it instructions and it will actually go and do things on your machine for you write two files, edit files, run commands. It's almost like doing things that a person could do on your computer for you.

Rizel Scarlett: Yes, exactly. That's a really good description. It doesn't just edit code for you. It can control your system. So I had it dimmed, the lights on my computer open different applications. You can really just automate anything even if you didn't know how to code.

Richard Moot: Yeah, I mean that's one of the things that I didn't even really think about when I first tried Goose. So one of the fun benefits of working here at Block is that I got to have fun with it before it actually went live. And one thing that I didn't really think about until I tried the desktop client and I forgot to allow the plug, there's two different ways you can interact with it. There's the CLI and the terminal, and then there's a desktop client, which I think right now works on Mac os.

Rizel Scarlett: Yes,

Richard Moot: I know there's big requests and to have it work in more than just Windows.

Rizel Scarlett: Yeah. Yeah. Right now, I mean we do have what I think is a working version of Windows, but the experience for the build time is not great. So we're still working through that.

Richard Moot: Yeah, well, having my own wrestling with working with the Windows sub Linux, I only really think of it as WSL. I've had so many headaches of trying to deal with networking and connecting and when do I need to switch to the power show versus a terminal, and it's all the reason I end up falling back to doing all of my development on my Mac.

Rizel Scarlett: Yeah. I haven't used the Windows computer since I was an IT support person. I don't even know what the new developments are now.

Richard Moot: Yeah, I mean I recently got burned by that where I didn't realize that in order to do certain virtualization stuff, you had to have a specific version of Windows, like some professional version, and then that enabled virtualization to run a VM of something interesting.

I think since then they've baked in the Windows sub Linux thing, which is basically just running Ubuntu in a virtualization for you. But that was an eyeopener, but thankfully Microsoft's working on fixing these things, but we digress. So coming back to Goose and what is it that most people have that you've sort of seen from the community as they've been starting to try it out and use Goose?

Rizel Scarlett: Yeah, I mean I just see people, well, a lot of it is mainly developers. That's the larger side of just using it to automate a lot of the tasks that they are doing. Maybe setting up, what am I trying to say, the boilerplate for their code or just sometimes other different things. I see people wanting to build local models and just in general or doing things with their kids, but I've also seen people doing silly experiments. This is where I find a lot of fun where people are having Goose talk to Goose or having a team of different, I guess geese, a team of agents and they're basically running a whole bunch of stuff. So they had one Goose be the PM and it was instructing all the engineer agents to perform different tasks. So it's a varied amount of things, but a lot of people are just trying to make their lives easier and have Goose do the mundane task in the background while they do the creative things. I've just been doing fun silly stuff. Like I had Goose play tic-tac-toe with me just for fun. I just wanted to see if it could do that and that was cool. Yeah.

Richard Moot: Have you beat it yet?

Rizel Scarlett: Every time I'm disappointed.

Richard Moot: You think it'd be way more advanced? I mean tech to can kind of, if I'm not mistaken, I think based on who goes first, it can be a determined game as long as you play with perfect strategy.

Rizel Scarlett: Yeah, I told it to play competitively. I'm still working on the perfect prompt. You always let me win Goose what's going on.

Richard Moot: Maybe that's part of the underlying LLMs is that they want to be helpful and so they think they're being helpful by letting you win, otherwise you wouldn't have fun.

Rizel Scarlett: That's true.

Richard Moot: Well, one of the things I was very fascinated by when first trying out the desktop client versus the CLI, because I habitually used the CLI version, but when I first opened up the desktop client, I had asked, what is it that you can do? And one of the things that it suggested that never even occurred to me was using Apple Scripts to run certain automations on your system. And I immediately just went, okay, can you organize my downloads folder and put everything? And it just immediately put everything in organized folders. And that's something I used to, I mean years ago, write my own quick little scripts to be like, oh, I need to move all these CSVs into someplace and PDFs. And it just immediately did it for me and it was just, that was amazing because now I can actually find where the certain things are.

Rizel Scarlett: That's so awesome. Yeah, I think you might've been using the computer controller extension, and that might be my favorite so far just because of, oh my gosh, it could actually, it's not just writing code for me. I'm like, okay, cool. There's other stuff that can do that Cursor does that as well, but it can tap into my computer system if I give it permission and move things around. I did a computer controller extension tutorial and I was just making it do silly stuff. Like I mentioned, it dimmed my computer screen. It opened up Safari and found classical music to play it, did some research on AI agents for me and put it in A CSV and then it turned back on the lights. It's so cool. I can just tell it, go do my own work for me and I'll it back.

Richard Moot: Yeah, that's great. And so you touched on something that I think is kind of an interesting part about it, and I feel like I want to come back to the part to really emphasize GOOSE is an open source project, and so it allows you to attach all of those various LLMs to sort of power the experience. But what you just touched on there is the extensions. So the way that it can do these things, could you tell us a little bit about what are extensions and how are they used by either Goose or the LLM? What is the relationship there?

Rizel Scarlett: Yeah, so extensions are basically, I guess you can think of it as extending it to different applications or different use cases. And we're doing that through a protocol called the Model Context Protocol, which Anthropic and us have been partnering on. And basically it allows any AI agent to kind of have access to different data. So for example, there's a Figma MCP or a model context protocol, and you can connect GOOSE to that MCP and tell it, Hey, here's some designs that I have, and Goose will be able to look at those and copy it rather than when you're maybe working with something like chat GBT, you have to go and give it context and be like, Hey, chat GBT, I'm working on this. Here's how this goes. And it takes up a lot of time. It'll just jump right in. And like you were saying, it's open source, so anybody can make MCP, you can connect it to any MCP out there that, I mean, some of them have to be honest, some CPS that are out there since it's open source, they don't all work, but the ones that do, you can connect it to Goose.

Richard Moot: Yeah. And so that's kind of like what you were originally talking about, the computer controller one.

Richard Moot: I'm going to hopefully describe this in a way that can make this visual for those that are listening in. But when you're using GOOSE in the terminal, when you first ever install it, it'll run you through a configuration of, Hey, it's basically setting up your profile and it says, which LLM do you want to connect to? And then you can kind of select from there and then it'll say, give me your credentials. And then after that you can get the option to, well actually maybe I'm jumping the gun here. I think it just gets you through storing that. And then you can have the option of once Goose is configured, you can toggle on certain extensions, extensions that are included, and then there's a process to actually go find these other ones that are published elsewhere and then add them in, right?

Rizel Scarlett: Yes, that's correct. We have your built-in extensions, like the developer extension, computer controller memory, and then you have the option to reach out to other extensions or even build your own custom extension and plug it in as well.

Richard Moot: Gotcha. And so the one I know that is the key one that's included with GOOSE is the developer extension. That's what does all the basic developer actions that you would think of, and then computer controller, that's kind of the one for doing more. Maybe tell me how is computer controller different than developer?

Rizel Scarlett: Yeah, so developer extension, it has the ability to run, shell command Shells scripts, so it'll go ahead and you say, create this file. It'll say touch create this file. It'll add the code for you in the places that it needs to. Whereas the computer controller, the intention of that is that it's supposed to be able to scrape the web, do different web interactions, be able to control your system, and then this is all automating things or even do automation scripts like you had mentioned before. These are all automating things for people who may not feel as comfortable coding, but they want to automate things within their system. That's the intention of the computer controller.

Richard Moot: Gotcha. And I'm curious, as this has been out there and having two different versions, I don't really want to say two different versions, two different ways of interacting with Goose with the desktop and the CLI, the desktop is really great for those that might not be more comfortable opening up a terminal. Have you seen folks coming in who are maybe less technical, who've been trying to actually use it through this way?

Richard Moot: Just curious all the various types of people that have been coming in and adopting or playing around with Goose.

Rizel Scarlett: Yeah. Well first side note, even though I'm comfortable with the terminal, I like using the desktop. I just think it looks more visually appealing for me. But I have seen people in Discord, I think there was a set of health professionals that they were part of a hackathon and they were using GOOSE to build whatever their submissions were. I don't know exactly what, but I thought that was interesting that they're going to build tools and submit to a hackathon even though they're not solely software engineers. So that's one example.

Richard Moot: Interesting. So it's been really interesting seeing all of the different ways that people have building on it. And I mean it's been pretty exciting seeing how people have really started to just start using it. One of the things I thought was interesting was it seemed like initially some people just didn't quite understand, and I'm sure there's just work to be done in general, not just for us, but for people trying to use agents that I think a lot of people have assumed initially like, oh, where's the LLM? Why is there no thing bundled with this? I feel like I can't do anything. And we're like, no, you have to connect it to something else. But the one that got me the most interested was trying to get it to talk to a local LLM. And so I've tinkered with this over the past couple of weekends of running a llama, getting a model running. But I will admit that I hit my own endpoint where I was like, okay, I have an LLM running, but it doesn't really work with the tool calling.

I think that's something maybe we talk a little bit, what is it tool calling is like that thing that how it uses the extensions. But maybe you can tell us a little bit about what is tool calling and why is it so important?

Rizel Scarlett: Yeah, I mean a lot of things you said that I want to touch on. First off agents, I think from not understanding how Goose will work, I think agents are still a fairly new concept and everybody is saying, oh, this is what an agent is, and they all have their different definitions. So when I first used Goose as well, I was a little bit confused. I was like, what is this supposed to do? I think similarly when Devin came out, people were like, this is not working how I thought it would. So that just happens. But yes, open source using Goose with an open source LLM is so powerful because Goose is an open source local AI agent, and then you have the local LLMs that you can leverage it with. So you can own your data and you don't even need internet to have the LLMs running.

Rizel Scarlett: But it is difficult. And like you said, tool calling. I am excited about this. I just came off of livestream with an engineer from alama. First off, the way he described tool calling was interesting. He said, it's not how I thought of it, but he was saying it's kind of how the LLM learns what it should or can do. So it's kind of like, oh, I have these set of tools here. Which one should I use for what I'm going to do? So let's say you told somebody I want to look up, or I want to go on a flight to, I don't know, Istanbul, I don't know why I picked that. What flights are available, how long will it take me? So then

Rizel Scarlett: An agent will be like, oh, tools do I have? And it might say, oh, I have a find flights tool and I have a MAP tool and I have this. So in order to find the flight, it might use that flights tool and in order to figure out the distance, it might use the MAPS tool or something like that. So that's kind of how it would work and it, I think it refines the results that it would have rather than looking at all these different things, it's like, okay, I'm going to use this particular tool and get this particular output. I learned a lot about open source models working with Goose or any agent, you have to, it's a lot of different prompting tips. First off, it's best probably to ask the open source LLM what access or what tools do I have access to? Because Open Source L LMS are much smaller, so they have a smaller context window and they're not able to interact with an agent like the cloud ones. They have so much more larger content with those, so they're able to take in more memory and stuff like that. So it's like I only have this amount, so let's get to what we need to do. Show me what tools are available. I'll grab that particular tool that's needed. And then another suggestion for when building an agent, and I think Goose will probably go in this direction to help improve the experience of working with open source. LLMs is having structured output. So the structured output would tell it kind of what it can and can't do and how the format of it would be printed out.

Richard Moot: Interesting.

Rizel Scarlett: I know I said a lot.

Richard Moot: No, no, no, that was great because it had me wondering with certain, when I started messing with one of the open source models and then I was trying to use it, I think the open source model I found was from somebody within Block who actually tried to fine tune a version of Deep Seek to be like, oh yeah, this one will work with tool calling. But then I think I was realizing I still needed probably an extension for it to actually make use of it because, and I think that's the part where maybe I misunderstood how these things work, but I'm sure that there's things that Goose does that it maybe tells the LLM almost pretext that is sent in the context. So before you even write your prompt, it has things that it will sort of give to be like, Hey, you have tools available to you or there's these tools. And so you might not see that in the terminal or in the desktop, but it's actually sort of adding these things at the beginning or maybe the end of the context to say, Hey, here's some tools available to you. Make sure that you use them. I'm oversimplifying, I'm sure, but that's kind of how I'm guessing that that might work.

Rizel Scarlett: That's how I seen it. So I looked at the logs because I wanted to really demo. I had no clue coming back from maternity leave that there was this little not necessarily working or trying to say obstacle, a little obstacle to work with open source LLMs and the agent. So I was like, oh, I'm ready to go. I'm going to go ahead and demo this live. And I realized, oh my gosh, it doesn't work perfectly. So I was looking at the logs and it does have a system prompt in the beginning where I didn't use deep seek like you did. I used Quinn 2.5 and it'll say in the beginning, you're a helpful assistant, you have access to computer controller and developer extension, and you can do this, this, and this. I think another limitation is our hardware as well. So even though it's on a local device, and I mean it's supposed to work on a local device, our local devices might not have enough RAM or memory. I have a 64 gigabyte, but the person that came on the live stream with me, he had 128, so that worked much better. So that might've been a limitation for you as well. And even though the system prompt already told it what extensions it would have, we both had a better result when we started off the conversation saying, Hey, what tools do you have access to? And it probably referred to the system prompt and then went ahead and printed it out to us.

Richard Moot: Yeah, yeah. And when I was tinkering with this, I actually was putting, I took an old gaming laptop basically set it up with, I converted it from Windows to Linux and it works reasonably well. It's still a little bit too slow for what I would actually want to be using it. So I have my regular gaming computer that I've actually, so when I want to mess with this, I actually just run a alarm on that when it's on, and then I use it as sort of a remote server and it's usable at that point. I think tokens don't fly through with the cloud LLMs. I mean it's still kind of slowish, but it's usable. And I think it's really fun to try out the local LLM stuff just, I mean as a developer, it gives me this mild peace of mind of my data's not going anywhere, so it feels safer somehow.

Rizel Scarlett: Yeah, you're such a tinkerer. I love that.

Richard Moot: Oh, I tinker with way too many things, network configurations, running clusters locally on my home lab, all stuff that I don't think I've ever used professionally, but I just love learning about this stuff.

Rizel Scarlett: I love that. I love that.

Richard Moot: So that kind of leads me one other thing that I'm interested in and I want to clarify. Not going to try to go into the realm of tips about using LLMs in augmenting our development workflows. And I think we're both in a similar camp of being in Devereux. It's really fun to just be like, I'm going to use this to start a new project or work in a language that I'm not usually familiar with and maybe see what I can build. I'm curious in terms of unprofessional tips, just things that you're sort of learning intuitively as you interact with it, how has it changed for you when you first started working with LLMs with doing software development to now? Have you ever noticed how you approach things a little bit differently?

Rizel Scarlett: That's a really good question. I haven't thought about it. I know when I, let me think because when I started using, my first experience with LLMs was like GitHub copilot and I made this whole playbook for people to use. I was like, make sure you have detailed examples and stuff like that, but how has it changed now?

Richard Moot: I'll give you an example. So to maybe help get your creative juices flowing on it. I know it's kind of coming out of left field, but when I first started using LLMs, I would just be like, Hey, can you build me this particular function? I think my first interaction was probably similar when I first used GitHub copilot and I was just doing tab completions and be like, I thought it was really cool that I could write a comment and describing the function that I want, and then I would start to write the function and then it would complete it out, and then I'd maybe have to edit a few things. And then once, I think it was when I first started using Goose is the first time I really tried one shotting things to be like build me an entire auth service for this app. And then I have now kind of swung back the other way a little bit where I tend to want to do a little bit more prompting when I want to do more of those one shots.

Rizel Scarlett: Interesting.

Richard Moot: But I've found that if I scaffold out half of it, maybe create the initial files and a single function or something and then it kind of fills in the rest, I have found it tends to work a little bit better. There's a few other tips that I can go into, but I don't know if that sort of helps. And that's how I've changed a little bit in how I've been using it because I've found that when you try doing the one shotting, it can just be too much that it's trying to do. And I feel like also it's too much context, especially I think one tip that I've heard continually with Goose and with others have a very clear what you considered a finished point and then move into a new context. Otherwise you can kind of go off the rails pretty fast.

Rizel Scarlett: Yeah, okay. I'll say this. I think my experience might be a little bit different just because when copilot came out, I had to demo GitHub copilot. So I was already thinking of, okay, how do I do this one-shot prompt that'll build out this whole thing so that people can be super impressed with me? So I think I was doing a lot of one-shot prompts and I probably brought over some of that learning from there. But

Rizel Scarlett: I think one thing I've been learning with building out the tutorials for Goose is kind of like what you're saying, how do I not let it get the context mixed up but still do a one-shot prompt? Because a lot of our tutorials, it's like we want to keep them short and sweet, so how do I make it do multiple things without overwhelming it or making it just fail? Sometimes it's like, I don't know what you want me to do, or it goes over the context limit. I leverage Goose hints a lot. So a lot of those different, most AI agents and AI tools have goose hints, cursor rules. I think Klein has its own thing as well. I dunno if you say Klein or Clean or whatever, but I dunno.

Richard Moot: I don't know what it is either.

Rizel Scarlett: Oh, okay. But they all have their own little, here's context for longer repeatable prompts. That way I use up less of the context window and I don't have to keep repeating myself like, Hey, make sure you set up this next JS thing. I'm already writing. We're going to use next JS and types script. We're going to use Tailwind or whatever it is, and then I can jump directly into the prompt that I want to do. And another thing I do, I don't know if this is weird, sometimes I ask Goose, how would you improve this prompt? I'll be like, I wrote this prompt and it failed. I'll open a new session. I'm like, how would you have improved this prompt? And it might give me a shorter one. So I don't know if I have these rules in my head, but I kind of just been really, really more experimental than I was in the past, I guess.

Richard Moot: Yeah, I think that's a really good thing to call out. I think that that's something that I had learned over time where even though I try to figure out a way to codify an approach, but I end up realizing these are just, I mean, it's weird. I feel like I'm going to describe this, I am the LLM, but they're tools and then you figure out, yeah, I try this one and it's not quite doing what I want, so I'm going to go try something else. And then I think what you said there was one that I've even tried and I didn't really even think about is so go, sometimes I'll switch LLM providers and I'd be like,I'm trying to do this over here and it's not working, and I give it, it gives me something else as a different context. I'm like, well, let's try and see if I feed that back over here, if that gets me the result I'm wanting. And so yeah, I think that's the biggest importance right now is to just be continually experimenting with it because I think as time goes on, we're going to end up learning different, I don't know, heuristics of shortcuts of trying to get what we want done. Sometimes I really like doing the one shots and then other times I'm like, I maybe scaffold something out and then I'm like, I'm just going to progressively iteratively work through this because I don't want it to. I think when I was trying to have it build an off service for an app, I was just too worried about it in one shoting it that I'd have one or two tiny bugs that are hard to catch somewhere.

Rizel Scarlett: That's true.

Richard Moot: And then I was just like, I really don't want to have to be going back through all these different methods and figure out like, oh yeah, I'm actually handling the Jot token incorrectly here. I'd rather just sort of progressively work through and feel confident in it and then be like, okay, I'll give a concrete example of one where I was using this library, I think it was called, it's a one-time password library for node js. I didn't realize that it was kind of really outdated and not maintained, and I implemented it in this app that I was working on, and I realized it wasn't working in the way that I anticipated, and then I was like, oh, there's an updated version of this library somewhere else that's more well adopted and being maintained. And so I was writing out the conversion, but I only converted say one of the sign-in function, and then once I had that one converted, I basically told my LLM, Hey, I'm switching from this library to this library and I've already done this particular function. Can you go through and update all of the other ones to use this new library?

Richard Moot: It did it, I would say nearly perfectly, which is pretty amazing. So I always find there's these little ways that you can be like, yeah, I'm going to do some of the manual work because it'll give me that confidence, but then lean on the LLM when I'm like, okay, I feel like I've done enough that it can finish it for me.

Rizel Scarlett: You know what? Now that you say more, it does make me think, I think I use a different workflow if I'm building versus I am doing developer advocacy work for it demoing or doing a tutorial. So if I am building, you're right, I probably first ask it what is its plan? And I do go more iteratively and I do try to do more of it and then let Goose jump in at certain areas. But if I'm doing a demonstration, I want it to be I do a one shot, which is an art in itself for it to be one shot and for it to be repeatable because AI is non-deterministic. So it could have worked with me once and I tried to demo it and then it never works again, and people were like, this didn't work. But yeah, I think that iterative process is really helpful for me when I say like, Hey, how are you going to go about this? And Okay, I'm going to do this part. You do this. And I always open up a new session when I feel like the conversation's been going long because I think, well, I know it loses context as it gets too big.

Richard Moot: Yeah, totally. I couldn't agree more on that part, and in fact, I've talked with some coworkers who've had mixed experiences with trying to use LLMs in their development work, but I think the thing that we just touched on there is that you have to just be dynamic in how you would use it and understand that you might not use it the same way in every context. And that's definitely what I've also learned when I've built out a fun example app for developer advocacy, just to build a proof of concept for the rest of my team to understand, hey, we can build an example and say next JS or Nest js or view or something, and I'm just using it to be like, Hey, I want you to one shot this out to basically get this mostly working to share something, but that's not how I build it When I'm like, Hey, I want to make this published and official for people to consume to say this is the way that you adopt Square. I would approach that very differently when using the LLM because I'd probably be curating my function signatures a little bit better and like, oh, this looks really good and understandable, and then have it fill out the rest

Richard Moot: Versus just one shotting things. If I was just going to have a one shot things, I would just probably tell people coming to our platform, so here's Goose, here's your LLM, go ahead and one shot your app.

Rizel Scarlett: That's all you need, just Goose. There you go. And I think you had mentioned a little earlier that different, sometimes you'll switch to a different LLM in addition to experimenting with those prompts. Different LLMs have different outputs, and I know on the roadmap we're planning to come out with, I guess different products come out with benches of here's how well this LLM works with our tool, so we're coming out with Goose Bench to say, okay, maybe if you want to do this type of process or build this type of app, then maybe Anthropics models might be best or maybe opening eyes or whatever.

Richard Moot: That would be really helpful because one other thing that I've been tinkering with when I try using an LLM for doing any kind of development work is I've actually, I think there's certain tools, I think one called Cursor New, but it basically just runs you through a series of give the project name a description, what libraries are using, and then it basically gives you prompts to feed in to create certain documents. But what I found interesting was the first one we'll do is say it'll help create a product requirements document, which I think we all call PRDs, but I'm just want to be super clear for people who might not know the lingo. And so I usually have it start out with creating the PRD, and then from there it'll create the code style guide and then it'll create your goose hints or cursor rules, and then I think finally it'll kind of create, I don't know how useful this one is, but it'll create a Read me of a progress tracker.

Richard Moot: That one I've found it's cool, but I've not found it to be totally useful because usually the only time it is useful is when I finally get to say the end of a task where I'm like, Hey, build out, scaffold out the project, and then at the end of it I say, go ahead and go update the progress file to clarify what has been built, what should be built next, and where are we at. Then I use that as the start of the next session of, Hey, check the progress thing to see where we are and what we need to build next, and then work from there. That's so smart. I like that it's really been useful for me, but at the same time, I would say by this fourth or fifth session, I don't know why it starts getting a little, I run into too many errors and I don't know if it's actually specific to the number of sessions or the particular feature that I'm building is maybe too complex and I need to spend more time breaking it into smaller pieces.

Rizel Scarlett: Interesting. I definitely, I want to try that on my own. I didn't think about saving it. I think the memory extension would do that as well for you maybe.

Richard Moot: Yeah, to be clear, I think in this instance I was using something like Cursor.

Rizel Scarlett: Okay.

Richard Moot: But I think I was wanting to try this with Goose. I do have the memory extension enabled, but I just haven't actually gotten to this is sort of what I've been doing on my own at home, but I definitely want to try this more with Goose, especially because Goose has goose hints and I can very easily convert my other rules to work for Goose. But yeah, it's been very useful for larger, more complex things that I've been building, but I still feel like it has its limitations.

Rizel Scarlett: Yeah, I like the challenge of figuring out what's stopping you and how do I get around this? And you're right, you did say you were using Cursor or some type of tool and I heard Goose in my head,

Richard Moot: But yeah, I found it really helpful just trying to just experiment with all the various different tools. There's a lot that I think all of us don't know. There's a lot of stuff to just keep figuring out. I just think that it's weird to say to someone like, Hey, I could tell you the different ways that you could use this, but I think right now most people should actually just figure out how it can work for them because I think if you go online, you can find people on all ends of the spectrum. There's some people they work on stuff that's so bespoke complex that they go, oh, LMS are just not useful for me. They're too bug ridden or the performance of the functions it creates isn't useful. I think those people are one end of the spectrum versus other of us who are like, oh my gosh, I spend most of my day doing architecture stuff like API architecture stuff, and so having an LLM to do the actual implementation of a design is huge unlock because I might come up with a great API design, but then I'm like, this is going to take me so long to code up and an LLM feels like a huge unlock,

Rizel Scarlett: And I really resonate with your point of figure out what works for you. You really just got to tinker with it and be like, I want to get this done and figure out how it'll apply to you because like you said, I could tell people one way, but I might not work in the same way as they do or I'm not working as complex stuff as them. Yeah,

Richard Moot: And not to anthropomorphize it too much, but I feel like it's probably not too dissimilar if somebody just said, Hey, out of nowhere they said, Hey, we gave you an assistant. You'd be like, I don't even know what I'm, you'd at first be like, what do I use the assistant for? Can you organize my files? It takes you a while to even figure out, okay, what can you do? What are you good at? You don't know until you actually have that, and so I think we all have to just start interacting with it and then we'll figure out where we actually want help. You might find out there's certain areas where we don't want it to help us in these things, but we do want it to help us in those other things.

Rizel Scarlett: Get to know the lm.

Richard Moot: Exactly. Yeah. Even the people who think, oh, I don't really like it for coding. You're like, yeah, but you might find out you hate writing super long complicated emails or reading super long, complicated emails. You'd be like, Hey, can you go ahead and give me the TLDR of this or write an email form? It can be that simple.

Rizel Scarlett

Sometimes I use LLMs to make sure my emails sound polite. Sometimes my emails don't come out polite, even though I'm not even throwing any shade, so if it sounds like I'm throwing shade, take it out.

Richard Moot: That's great. I think more people should be using that. Maybe I'm biased because being in the Devereux space, you get to interact with all kinds of folks.

Rizel Scarlett: Yes, that's true.

Richard Moot: And they all have very different communication styles, not to name names. We did have one person who in this square community who I actually was very thankful for them, but they spent so much time just finding every single bug in our APIs, and it drove some people a little bit crazy like, oh my gosh, he found another one, but then I'm just sitting here just like, yeah, thank you. This is free qa. This is amazing. I'm going to keep encouraging this person to keep saying this stuff. I don't find it annoying in the least

Rizel Scarlett: I could get it. I could understand it on both sides. As an engineer, you're like, no more work, but as a developer advocate, you're like, yay, my product's getting improved,

Richard Moot: And it's validating. They love the product so much, they want it to be better, so of course, let's go do that.

Rizel Scarlett: It's true. I love that.